OpenAI's ChatGPT Atlas: A New Era in AI Browsing

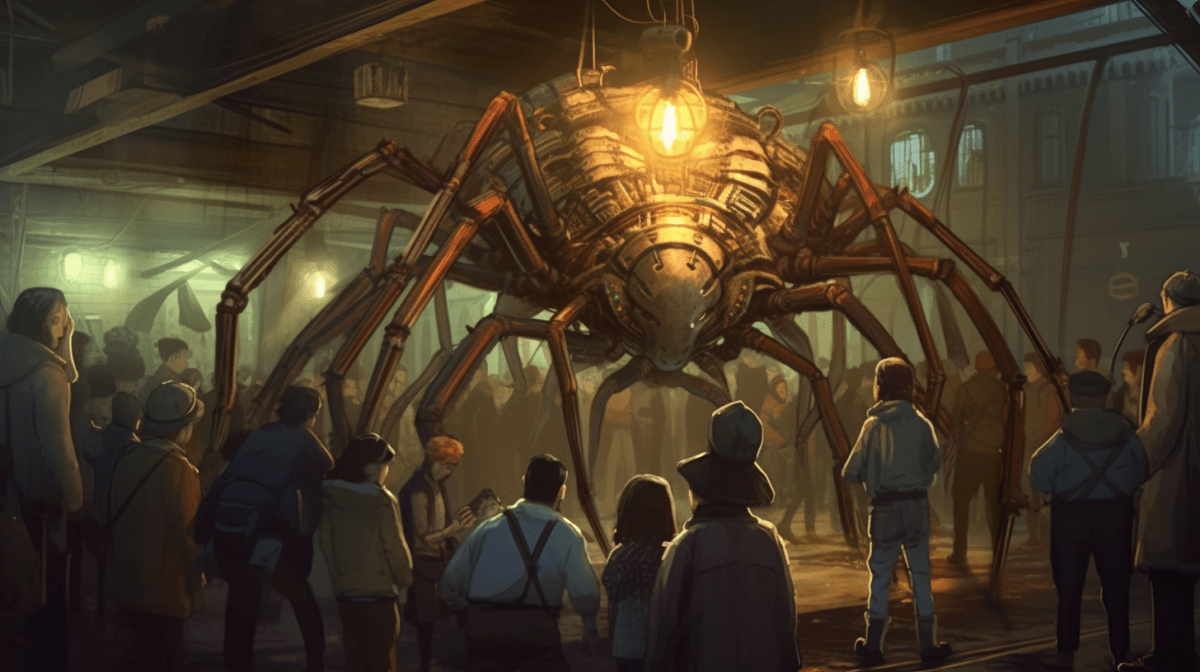

The article discusses how ChatGPT's Atlas browser, when in agent mode, avoids directly accessing certain sources, such as the New York Times and PCMag, because of ongoing copyright disputes with OpenAI. Instead, the agent finds alternative sources and summarizes the content from those, which highlights the ethical and legal considerations involved in AI web crawling.