New Fair and Diverse Dataset Enhances Ethical AI Benchmarking

TL;DR Summary

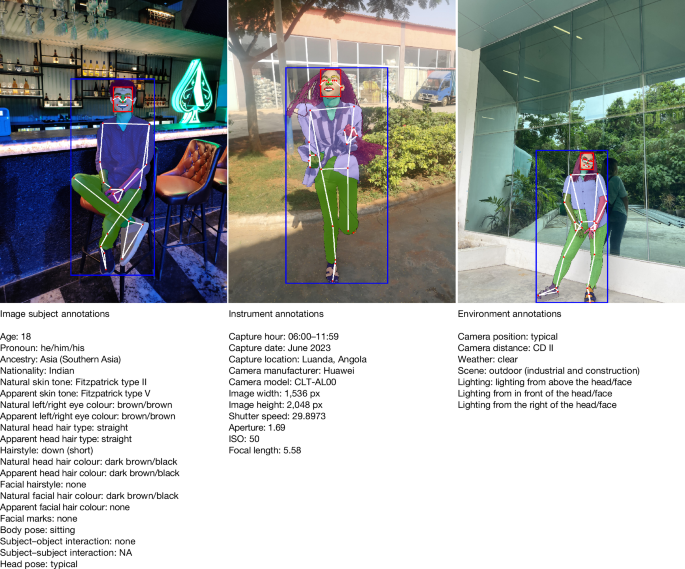

The article introduces FHIBE, a large, ethically curated, human-centric image dataset for evaluating bias in AI models across diverse demographics and tasks. It emphasizes responsible data collection, comprehensive annotations, and the dataset's potential to improve fairness in AI systems.

Topics: technology, #ai-bias, #dataset-diversity, #ethical-datasets, #human-centric-ai, #privacy-and-consent, #science-and-technology

- Fair human-centric image dataset for ethical AI benchmarking (Nature)

- Sony has a new benchmark for ethical AI (Engadget)

- There might be something fundamentally wrong with many AI systems, scientists say (The Independent)

- New Dataset Developed to Address AI Ethical Concerns with Focus on Fairness and Visual Diversity (geneonline.com)

- Ethical AI Benchmarking with Fair Human-Centric Datasets (Bioengineer.org)
