Nvidia's Open-Source Toolkit Helps Make AI Models Safer and More Accurate

TL;DR Summary

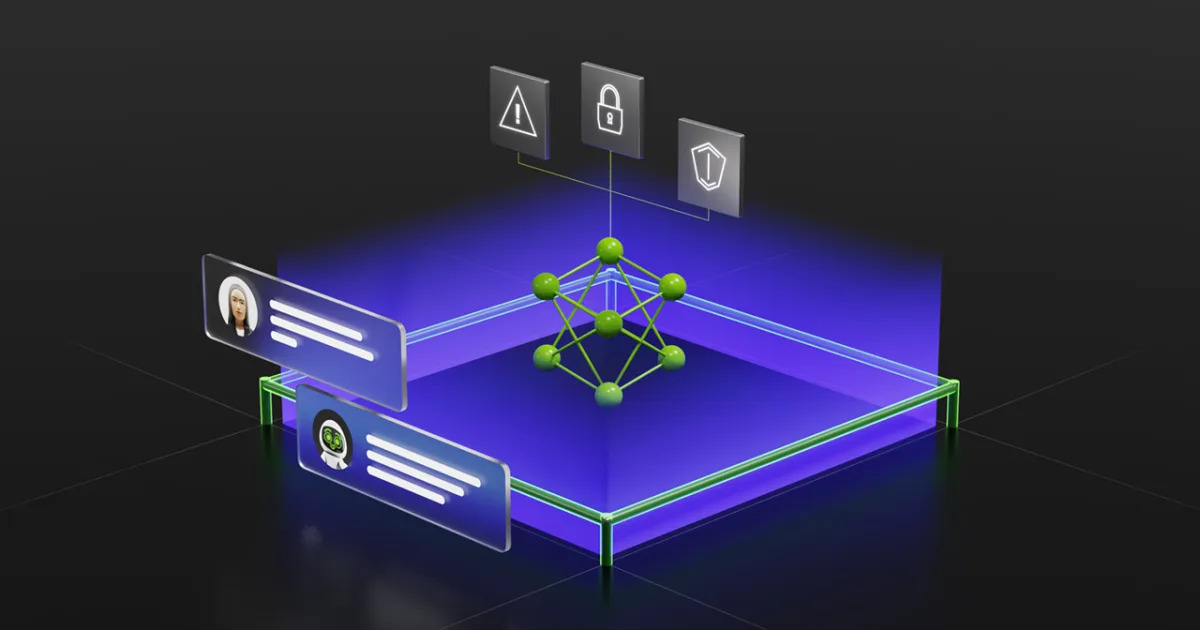

NVIDIA has launched NeMo Guardrails, an open-source toolkit that helps developers keep their generative AI apps accurate, appropriate, and safe. It lets software engineers enforce three kinds of limits on their in-house large language models (LLMs): topical, safety, and security guardrails. According to NVIDIA, NeMo Guardrails works with all LLMs and is designed so that nearly any software developer can use it. NVIDIA is also incorporating NeMo Guardrails into its existing NeMo framework for building generative AI models.

- NVIDIA made an open source tool for creating safer and more secure AI models (Engadget)

- Nvidia has a new way to prevent A.I. chatbots from 'hallucinating' wrong facts (CNBC)

- Nvidia Has a Fix for AI Chatbots' Problem: Made-Up Facts, or 'Hallucinations' (Barron's)

- Nvidia Open Sources Universal 'Guardrails' to Keep Those Dumb AIs in Line (Gizmodo)

- Nvidia releases a toolkit to make text-generating AI ‘safer’ (TechCrunch)

Want the full story? Read the original article

Read on Engadget